Overview

A Bidirectional Reflectance Distribution Function (BRDF) defines how light is reflected at a point on an opaque surface. It takes an incoming and an outgoing light direction and returns the ratio of the radiance reflected along the outgoing direction to the irradiance arriving from the incoming direction. Physically based BRDFs additionally require positivity (the returned value is never negative), Helmholtz reciprocity (swapping the incoming and outgoing light directions does not change the returned value) and energy conservation (the surface never reflects more energy than it receives). In practical implementations of physically based BRDFs these rules are sometimes relaxed in favor of computational speed when the visual impact is minimal, at least for non-scientific graphics.

BRDF Lighting Function

$$\Large{f(l, v, n) = Diffuse(l, n) + Specular(l, v, n, h)}$$

$l$ := light direction

$v$ := view direction

$n$ := normal vector

$h$ := light view halfway vector
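
The halfway vector is not an independent input; it is derived from $l$ and $v$. A minimal sketch of that step, assuming all directions are unit vectors pointing away from the surface (the helper names `normalize`, `dot` and `halfway` are illustrative and not taken from the project):

```python
import math

def normalize(vec):
    """Scale a 3-component vector to unit length."""
    length = math.sqrt(sum(c * c for c in vec))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in vec)

def dot(a, b):
    """Dot product of two vectors of equal size."""
    return sum(x * y for x, y in zip(a, b))

def halfway(l, v):
    """Unit vector halfway between light direction l and view direction v."""
    return normalize(tuple(a + b for a, b in zip(l, v)))

# Assumed example setup: light and viewer mirrored around the normal.
n = (0.0, 0.0, 1.0)
l = normalize((1.0, 0.0, 1.0))
v = normalize((-1.0, 0.0, 1.0))
h = halfway(l, v)  # coincides with n for this mirrored setup
```

Because $h$ bisects $l$ and $v$, the products $l \cdot h$ and $v \cdot h$ are always equal, so either one can be used wherever that cosine is needed.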

The most widespread BRDF today, and the one I used in this project, combines the Cook-Torrance Term with the simple Lambert Diffuse Term. The Lambert Term is very common even outside of physically based rendering and adds little physical complexity, while the Cook-Torrance Term consists of three major subterms: the Fresnel Term, the Geometric Occlusion Term and the Microfacet Distribution Term.

Lambert Diffuse Term

$$\Large{Diffuse(l,n) = l \cdot n}$$

$l$ := light direction

$n$ := normal vector
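
As a rough sketch, the diffuse term is a single dot product. The clamp to zero is an assumption added here so that light arriving from behind the surface contributes nothing; it is not part of the formula above.

```python
def lambert_diffuse(l, n):
    """Diffuse(l, n) = l . n for unit vectors l and n.

    Assumption: negative values are clamped to zero, so a light
    below the horizon of the surface adds no diffuse light.
    """
    n_dot_l = sum(a * b for a, b in zip(l, n))
    return max(n_dot_l, 0.0)

# Light straight above the surface gives the full contribution.
facing = lambert_diffuse((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
# Light from behind the surface is clamped away.
behind = lambert_diffuse((0.0, 0.0, -1.0), (0.0, 0.0, 1.0))
```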

Cook-Torrance Specular Term

$$\Large{Specular(l, v, n, h) = {F(l, h) G(l, v, h) D(h) \over 4(n \cdot l)(n \cdot v)}}$$

$D$ := Distribution Term

$F$ := Fresnel Term

$G$ := Geometric Occlusion Term

$l$ := light direction

$v$ := view direction

$n$ := normal vector

$h$ := light view halfway vector
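
A minimal sketch of how the three subterms combine, assuming $F$, $G$ and $D$ have already been evaluated to plain numbers. The early return for back-facing directions and the `eps` guard against division by zero are assumptions added for numerical safety, not part of the formula.

```python
def cook_torrance_specular(F, G, D, n_dot_l, n_dot_v, eps=1e-6):
    """Specular = F * G * D / (4 * (n.l) * (n.v)).

    F, G, D are the already evaluated Fresnel, Geometric Occlusion
    and Distribution values. n_dot_l and n_dot_v are the cosines
    between the normal and the light / view directions.
    """
    # Assumption: no specular light if the light or the viewer is
    # below the horizon of the surface.
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0
    # Assumption: eps keeps the denominator away from zero at
    # grazing angles.
    return (F * G * D) / max(4.0 * n_dot_l * n_dot_v, eps)

# With light and viewer along the normal, the denominator is 4.
head_on = cook_torrance_specular(0.04, 1.0, 1.0, 1.0, 1.0)
```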

Fresnel Term

The Fresnel Term describes how much light is reflected rather than transmitted, based on the refractive index of a given material and the angle of incidence between the light and the normal. The reflected fraction is smallest when the light and normal directions are parallel, where it equals the material's reflectance at normal incidence, and it grows as the angle increases. At grazing incidence (an angle approaching 90°) nearly all of the incoming light is reflected, like a mirror, even if the material reflects light poorly at normal incidence. This can be quite unexpected for some materials.

Fresnel Term (Schlick-Gauss)

$$\Large{F_{schlick\_gauss}(l, h) = F_0 + (1 - F_0) \times (1 - l \cdot h)^5}$$

$F_0$ := specular reflectance at normal incidence

$l$ := light direction

$h$ := light view halfway vector
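
The formula above translates almost directly into code. In this sketch the argument `cos_theta` stands for the cosine between the halfway vector and the light direction, which equals the cosine between the halfway vector and the view direction because $h$ bisects the two. The clamp to the range 0 to 1 is an assumption added to absorb rounding errors.

```python
def fresnel_schlick(f0, cos_theta):
    """F = F0 + (1 - F0) * (1 - cos_theta)^5.

    f0 is the specular reflectance at normal incidence,
    cos_theta the cosine between halfway vector and light direction.
    """
    # Assumption: clamp so rounding errors cannot push the base
    # of the power outside [0, 1].
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

# A typical dielectric value for f0 (assumed for illustration).
at_normal = fresnel_schlick(0.04, 1.0)   # equals f0
at_grazing = fresnel_schlick(0.04, 0.0)  # approaches full reflection
```

The two sample calls show the behavior described in the text: reflectance starts at $F_0$ for normal incidence and rises toward 1 at grazing angles.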

Geometric Occlusion Term

Geometric Occlusion accounts for roughness at the microscopic level. Some light is never reflected because tiny parts of the surface cast shadows on each other, some reflected light is occluded by other tiny parts before it reaches the viewer, and some light reaches the viewer only after bouncing unpredictably from an otherwise invisible direction. The rougher a material is, the more diffusely it behaves and the less specular light is observed.

Geometric Occlusion Term (Schlick)

$$\Large{G_{schlick}(l, v, h) = G_1(n, l) \times G_1(n, v)}$$

$$\Large{G_1(n, v) = {2 \times (n \cdot v) \over (n \cdot v) + \sqrt{r^2 + (1 - r^2)(n \cdot v)^2}}}$$

$r$ := roughness

$l$ := light direction

$v$ := view direction

$n$ := normal vector

$h$ := light view halfway vector
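
A sketch of the two formulas above, assuming the cosines $n \cdot l$ and $n \cdot v$ are passed in precomputed. Returning zero for directions below the horizon is an assumption added here to avoid meaningless negative inputs.

```python
import math

def g1(n_dot_x, r):
    """G1 = 2 (n.x) / ((n.x) + sqrt(r^2 + (1 - r^2) (n.x)^2)).

    n_dot_x is the cosine between the normal and either the light
    or the view direction, r is the roughness.
    """
    # Assumption: directions below the surface are fully occluded.
    if n_dot_x <= 0.0:
        return 0.0
    root = math.sqrt(r * r + (1.0 - r * r) * n_dot_x * n_dot_x)
    return 2.0 * n_dot_x / (n_dot_x + root)

def geometric_occlusion(n_dot_l, n_dot_v, r):
    """G = G1(n, l) * G1(n, v)."""
    return g1(n_dot_l, r) * g1(n_dot_v, r)

# Looking and lighting straight along the normal: nothing is occluded.
unoccluded = geometric_occlusion(1.0, 1.0, 0.5)
# A fully rough surface at a slanted angle loses part of the light.
rough_slanted = geometric_occlusion(0.5, 0.5, 1.0)
```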

Distribution Term

The last factor, the Distribution Term, describes how the orientations of the microfacets are statistically distributed around the surface normal. On most common surfaces the microfacets are distributed evenly around the normal; such surfaces are called isotropic. Some surfaces, like brushed metal, have microfacets that favor a certain direction along the surface; these are called anisotropic. Both types can be modeled in this term, but some implementations only support isotropic surfaces.

Distribution Term (GGX)

$$\Large{D_{ggx}(h) = {r^4 \over \pi ((n \cdot h)^2 \times (r^4 - 1) + 1)^2}}$$

$r$ := roughness

$n$ := normal vector

$h$ := light view halfway vector
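
The distribution formula above can be sketched as follows, assuming the cosine $n \cdot h$ is passed in precomputed and the roughness is greater than zero (a roughness of exactly zero makes the denominator vanish when $n \cdot h = 1$).

```python
import math

def distribution_ggx(n_dot_h, r):
    """D = r^4 / (pi * ((n.h)^2 * (r^4 - 1) + 1)^2).

    n_dot_h is the cosine between the normal and the halfway vector,
    r is the roughness. Assumption: r > 0.
    """
    r4 = r ** 4
    denom = n_dot_h * n_dot_h * (r4 - 1.0) + 1.0
    return r4 / (math.pi * denom * denom)

# A smoother surface concentrates the microfacets around the normal,
# so the peak at n.h = 1 is higher than for a rough surface.
peak_smooth = distribution_ggx(1.0, 0.5)
peak_rough = distribution_ggx(1.0, 1.0)
```

For a roughness of 1 the term becomes the constant $1/\pi$ for every halfway vector, which matches the intuition that a fully rough surface has no preferred microfacet orientation.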

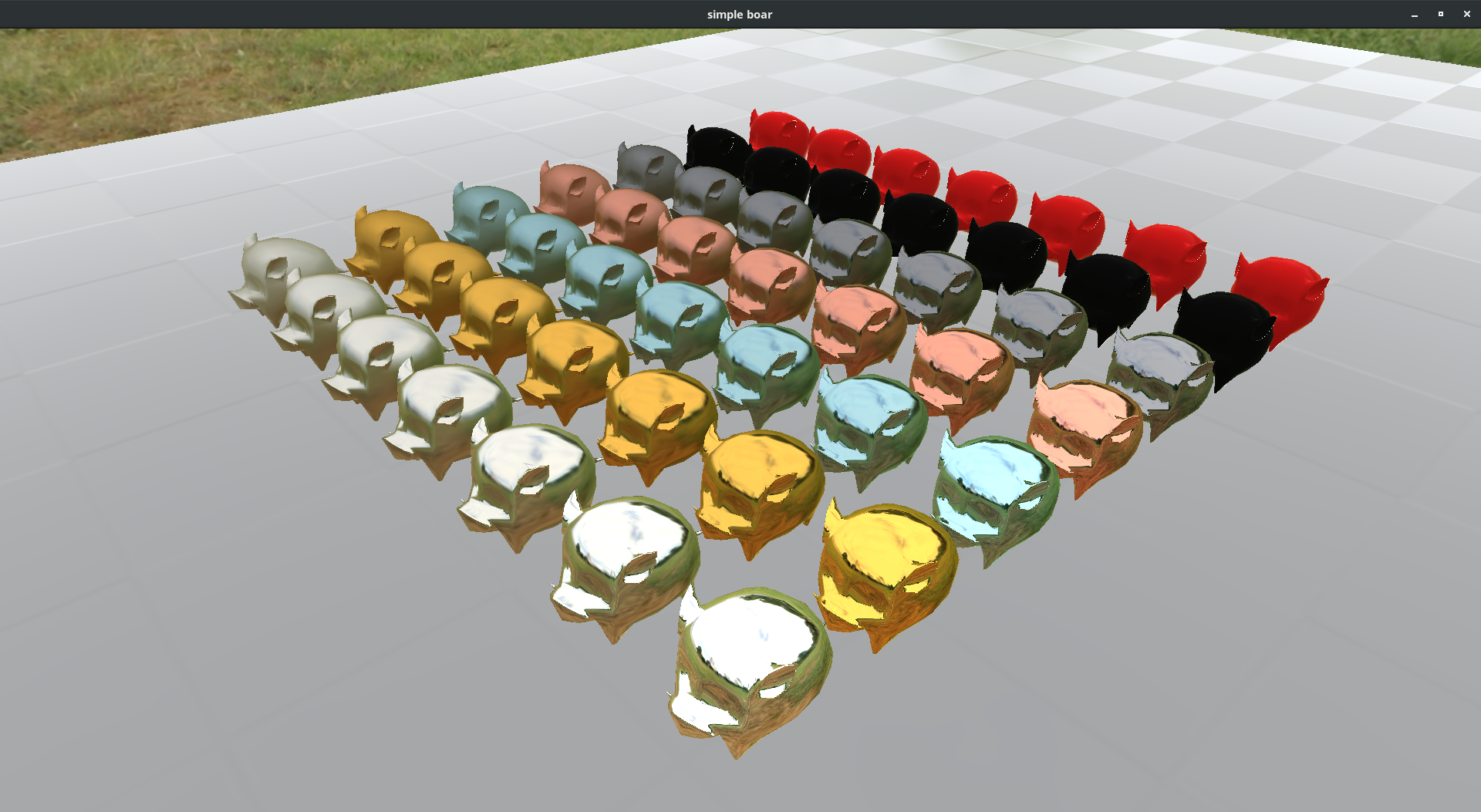

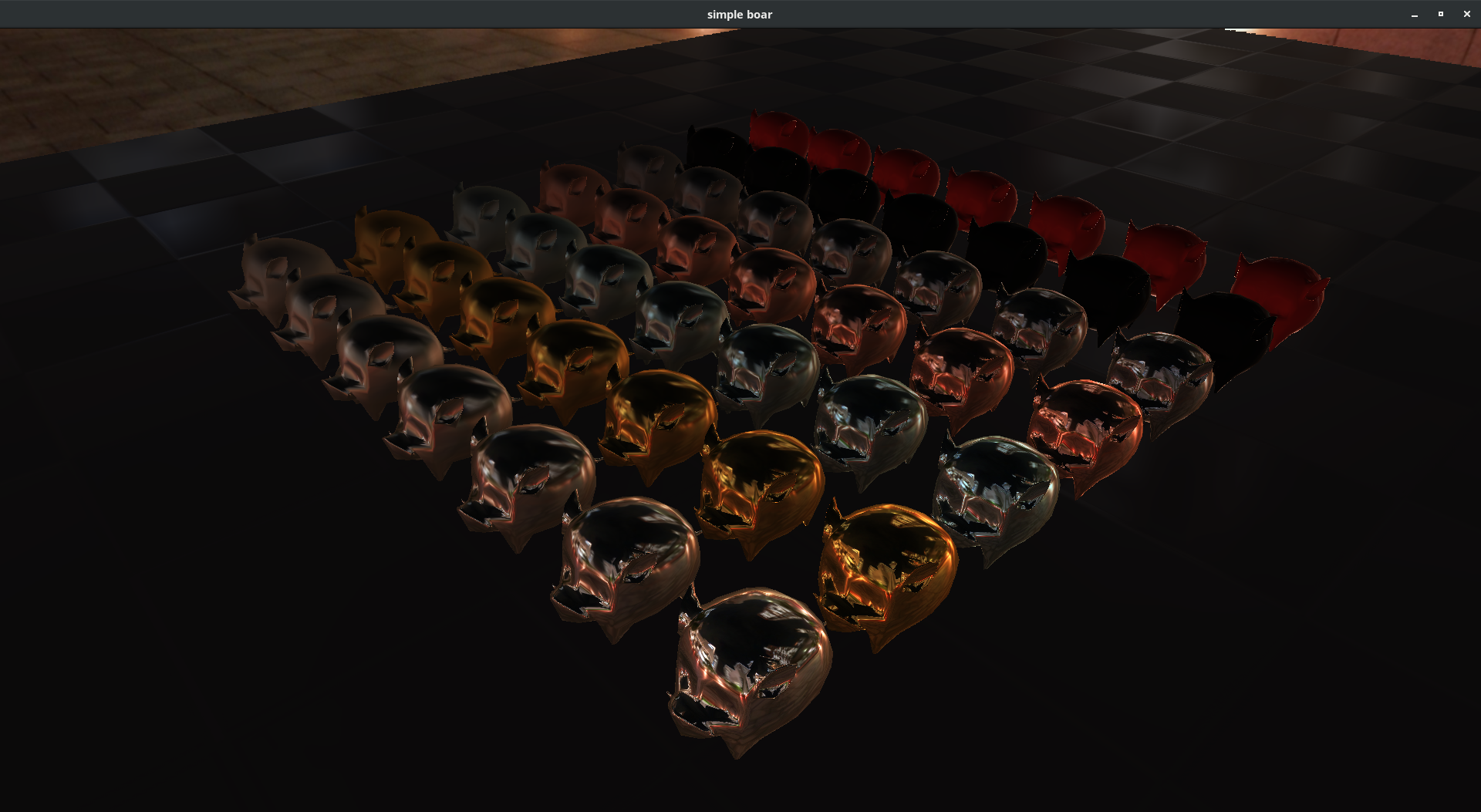

My Choice of Functions

For many of these subterms there are several implementations that differ in computational overhead, physical accuracy and visual appeal. As a result, most researchers and companies handpick their own combination of implementations to suit their needs. I did the same, optimizing for performance first, visual appeal second and physical accuracy third. The names of the specific implementations I used are given in parentheses in the headings above.