Introduction

This project and web page are the result of the "Fortgeschrittenenpraktikum" (IFP, an advanced practical course) started in the summer semester 2016 (May 2016) by Ömercan Yazici. The project is about implementing Photon Mapping, a ray-tracing-based rendering technique, in the existing software 'PearRay'. It also covers the underlying theory and technology of Photon Mapping, which was first introduced in 1995 by Henrik Wann Jensen and discussed further in his book Realistic Image Synthesis Using Photon Mapping.

More information about PearRay and the underlying theory is available in the following sections.

Application

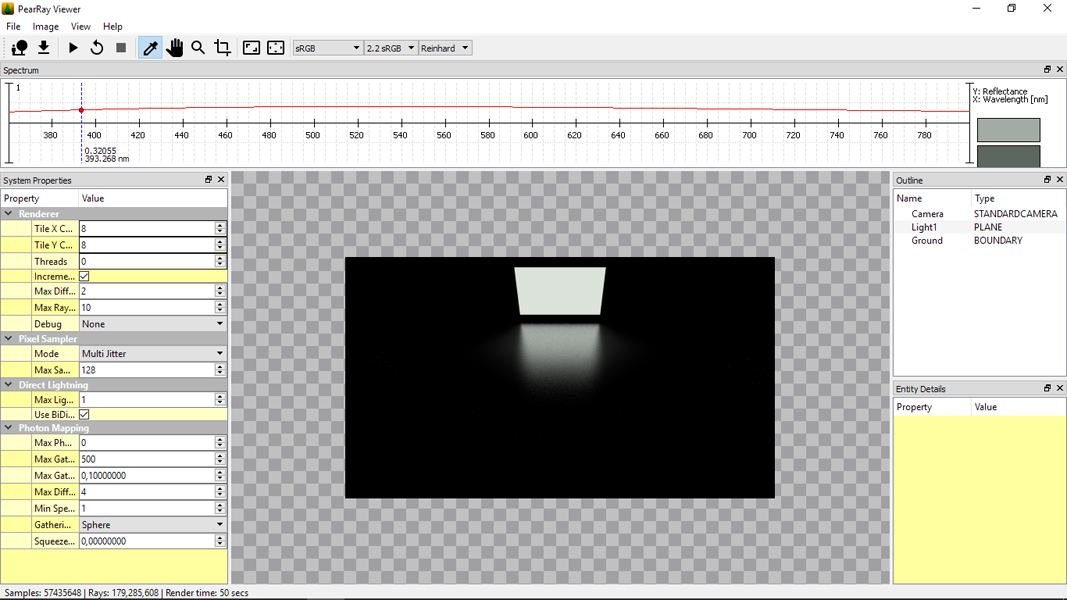

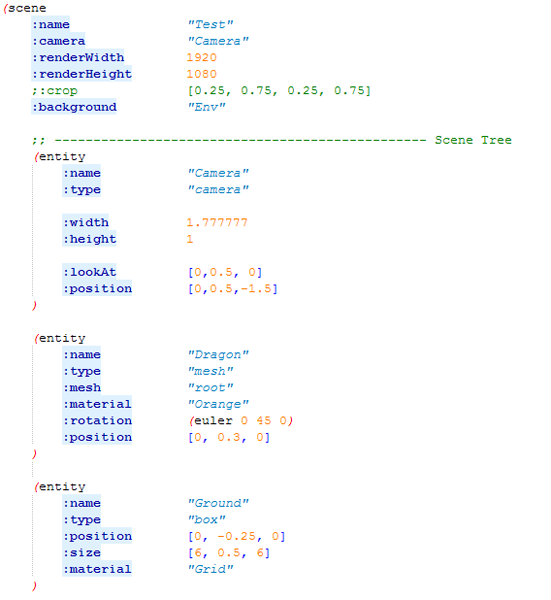

Development of the initial application started in October 2015 but, due to a very long pause, it had only the most basic ray-tracing functionality until March 2016. The rework started alongside the IFP project in May 2016. The application is still under active development, even though the IFP has finished. It consists of a main library (pr_lib), a utility library (pr_lib_utils) and an optional Qt-based viewer (rayview). The viewer provides a spectral graph for analyzing individual rendered pixels, supports cropping and re-rendering of custom regions, and offers many more features for analyzing and tweaking scenes.

Currently, PearRay offers many of the basic and advanced features of a common ray tracer:

- High-quality images based on spectral rendering and multiple importance sampling.

- Two integrators: Distributed Ray Tracing and Bidirectional Ray Tracing.

- Multi-core and GPU-based rendering (OpenCL).

- Depth of field.

- OBJ meshes.

- OSL-based shaders with displacement mapping.

- Resource caching.

- Motion blur.

- Command-line interface (PearCLI).

- OpenSubdiv.

- B-splines / NURBS.

- Ptex.

More features are still in development, and the full project is available on GitHub under an open-source license. It also has a wiki and a web page.

Theory

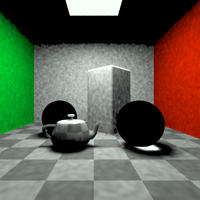

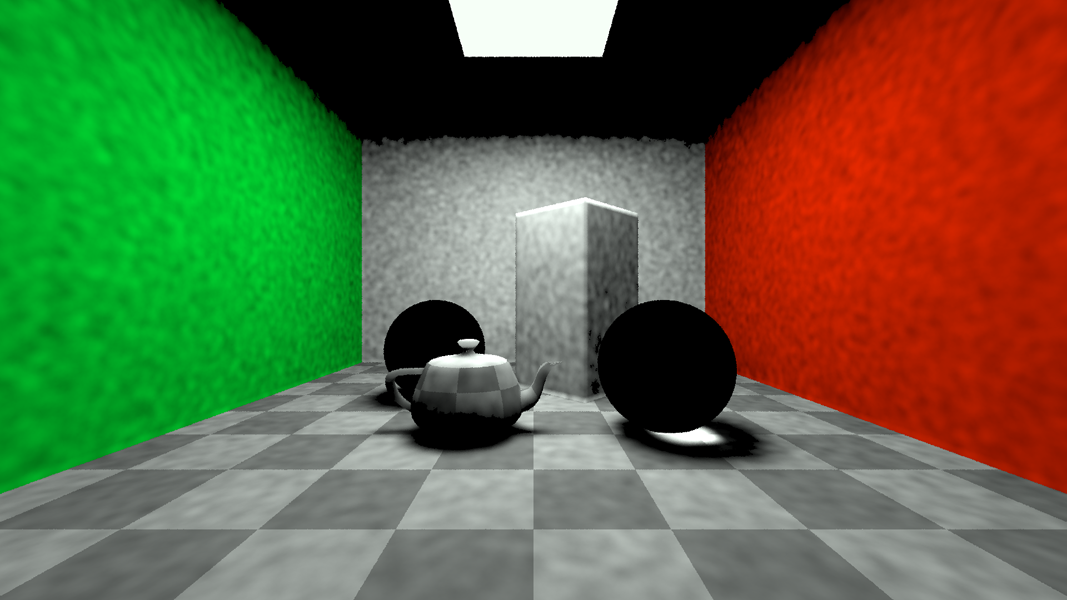

The first Photon Mapping algorithm was introduced in 1995 by Henrik Wann Jensen as a two-pass global illumination algorithm:

- Photons from the light sources are sent out into the scene, and whenever a photon hits a diffuse surface, a photon event is stored in a map.

- The scene is rendered with another algorithm (e.g. direct ray tracing), and radiance is estimated from the nearest photons within a sphere around each surface point.

Generally speaking, the original algorithm splits into three parts:

Photon Tracing

Photon Tracing is the first step of the first pass. In this step, every light source (including emissive objects) emits a computed number of photons into the scene. The number of photons per light source is a user parameter and can depend on the size, intensity and/or importance of the light source. Optimizing this step with importance sampling and a so-called projection map is possible and worthwhile.
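A minimal sketch of the emission step, assuming isotropic point lights and a photon budget split in proportion to light power (one of the heuristics mentioned above; size or importance would work just as well). All names (`EmittedPhoton`, `photon_budget`, `emit_photons`) are hypothetical and not PearRay's API. Each photon carries the light's power divided by the number of photons emitted from that light, so together they carry exactly the light's power.

```python
import math
import random
from typing import NamedTuple

Vec = tuple[float, float, float]


class EmittedPhoton(NamedTuple):
    position: Vec
    direction: Vec  # unit vector
    power: float    # scalar for brevity; RGB or spectral in a real renderer


def uniform_sphere_direction(rng: random.Random) -> Vec:
    """Uniformly distributed unit vector, as emitted by an isotropic point light."""
    z = 1.0 - 2.0 * rng.random()
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)


def photon_budget(light_powers: list[float], total_photons: int) -> list[int]:
    """Split a user-given photon budget in proportion to each light's power.
    Because of rounding, the parts may not add up exactly to total_photons."""
    total_power = sum(light_powers)
    return [round(total_photons * p / total_power) for p in light_powers]


def emit_photons(position: Vec, light_power: float, n: int,
                 rng: random.Random) -> list[EmittedPhoton]:
    """Emit n photons from a point light; each carries light_power / n."""
    return [EmittedPhoton(position, uniform_sphere_direction(rng), light_power / n)
            for _ in range(n)]


# Usage: two point lights with power 100 and 300 share a budget of 1,000 photons.
rng = random.Random(42)
budget = photon_budget([100.0, 300.0], 1000)  # [250, 750]
photons = emit_photons((0.0, 2.0, 0.0), 100.0, budget[0], rng)
```

Area lights or emissive objects would additionally sample an emission point on the surface and a cosine-weighted direction; importance sampling or a projection map would concentrate photons towards the directions where scene geometry actually is.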

Photon Storing

This part of the first pass happens whenever a photon hits a surface. What happens to the photon next depends on the type of the surface:

- Specular surface: Reflect or refract the incoming photon, but don't store it.

- Diffuse surface: Store the photon event information (position, direction, RGB/spectral power) in a map. Optionally, the photon can be reflected according to the BRDF to simulate color bleeding.
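The two cases above can be sketched as follows. This is a minimal illustration under several assumptions: a scalar photon power, perfect mirrors as the only specular surfaces (refraction is omitted), a Lambertian diffuse surface, and Russian roulette with survival probability equal to the surface albedo for the optional diffuse bounce, a common choice in Jensen-style photon tracing. The names `handle_hit` and `cosine_direction` are hypothetical, not PearRay's code.

```python
import math
import random
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]


@dataclass
class Photon:
    position: Vec
    direction: Vec  # unit direction of travel
    power: float    # scalar for brevity; RGB or spectral in a real renderer


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect(d: Vec, n: Vec) -> Vec:
    """Mirror reflection of direction d about the unit normal n."""
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])


def cosine_direction(n: Vec, rng: random.Random) -> Vec:
    """Cosine-weighted (Lambertian) direction around n:
    normalize(n + uniformly distributed point on the unit sphere)."""
    while True:
        z = 1.0 - 2.0 * rng.random()
        phi = 2.0 * math.pi * rng.random()
        r = math.sqrt(max(0.0, 1.0 - z * z))
        v = (n[0] + r * math.cos(phi), n[1] + r * math.sin(phi), n[2] + z)
        length = math.sqrt(dot(v, v))
        if length > 1e-9:  # retry in the degenerate case v == 0
            return (v[0] / length, v[1] / length, v[2] / length)


def handle_hit(photon: Photon, hit_point: Vec, normal: Vec, surface: str,
               albedo: float, rng: random.Random,
               photon_map: list[Photon]) -> Optional[Photon]:
    """Process one surface hit; return the continuing photon or None if absorbed."""
    if surface == "specular":
        # Specular: bounce (mirror reflection only here), but do not store.
        return Photon(hit_point, reflect(photon.direction, normal), photon.power)
    # Diffuse: store the photon event (position, incoming direction, power).
    photon_map.append(Photon(hit_point, photon.direction, photon.power))
    # Russian roulette: continue with probability `albedo`; the power stays
    # unchanged because (albedo * power) / albedo == power.
    if rng.random() >= albedo:
        return None
    return Photon(hit_point, cosine_direction(normal, rng), photon.power)


# Usage: a photon travelling straight down hits the floor z = 0.
rng = random.Random(3)
photon_map: list[Photon] = []
incoming = Photon((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), 1.0)
mirrored = handle_hit(incoming, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), "specular", 0.6, rng, photon_map)
bounced = handle_hit(incoming, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), "diffuse", 1.0, rng, photon_map)
```

Russian roulette keeps the photon powers equal instead of letting them shrink with every bounce, which keeps the later density estimate well behaved; with RGB or spectral power, the survival probability is usually derived from an average reflectance and the power is rescaled per channel.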

The resulting map can be huge, depending on the power representation (RGB or spectral). For example, 1,000,000 stored photon events take approx. 26 MB of memory with an RGB power representation. This is not much for current hardware, but searching such a large number of photon events (more than 1,000,000) can become a big problem. Therefore, the photon map should be organized as a kd-tree to speed up the next pass, the "gathering".
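The 26 MB figure can be reproduced with one plausible packed layout. This layout is an assumption, not necessarily PearRay's actual photon structure: a float32 position, float32 RGB power and a direction quantized to two bytes (polar and azimuthal angle), similar to the compressed direction Jensen describes. The spectral variant with 16 samples is likewise hypothetical and only shows how the power representation drives the size.

```python
import math
import struct

# Hypothetical packed layout (little-endian, standard sizes, no padding):
#   3 x float32 position + 3 x float32 RGB power + 2 x uint8 direction = 26 bytes
RGB_PHOTON = "<3f3f2B"
# Hypothetical spectral variant with 16 float32 power samples instead of RGB.
SPECTRAL_PHOTON = "<3f16f2B"


def encode_direction(d: tuple[float, float, float]) -> tuple[int, int]:
    """Quantize a unit direction to two bytes: polar angle theta and azimuth phi."""
    x, y, z = d
    theta = int(255 * math.acos(max(-1.0, min(1.0, z))) / math.pi)
    phi = int(255 * (math.atan2(y, x) + math.pi) / (2.0 * math.pi))
    return theta, phi


def pack_photon(position, power_rgb, direction) -> bytes:
    return struct.pack(RGB_PHOTON, *position, *power_rgb, *encode_direction(direction))


def photon_map_bytes(num_photons: int, layout: str = RGB_PHOTON) -> int:
    return num_photons * struct.calcsize(layout)


print(photon_map_bytes(1_000_000))                   # 26000000 bytes, approx. 26 MB
print(photon_map_bytes(1_000_000, SPECTRAL_PHOTON))  # 78000000 bytes, approx. 78 MB
```

Note that an equivalent C/C++ struct would typically be padded to 28 bytes for 4-byte alignment unless it is explicitly packed.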

Photon Gathering

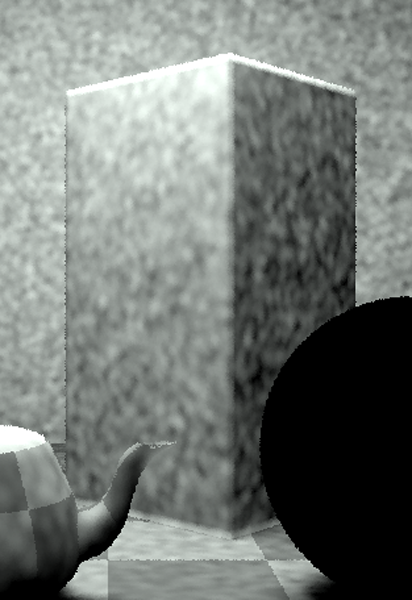

The second pass should be combined with another ray-tracing algorithm such as direct ray tracing. When a ray hits a surface and the BRDF is evaluated, the indirect radiance is additionally estimated by locating the nearest photons (photon events) in the photon map within a sphere around the intersection point. Because photons are stored independently of the geometry, this radiance estimate can include invalid photons (e.g. from the other side of a thin plane). One solution is to compress the sphere into a disc along the surface normal.
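A minimal sketch of the gathering step, assuming a scalar photon power and a purely diffuse (Lambertian) surface with BRDF albedo/π. The names (`build_kdtree`, `nearest_photons`, `radiance_estimate`, `disc_thickness`) are hypothetical, not PearRay's API. The code builds a balanced kd-tree over photon positions, finds the k nearest photons within a maximum radius, and evaluates the density estimate L ≈ Σ f_r ΔΦ_p / (π r²), where r is the distance to the farthest photon found. Rejecting photons farther than `disc_thickness` from the tangent plane is one simple way to compress the sphere into a disc; the check that rejects photons arriving from behind the surface is an extra assumption.

```python
import heapq
import math
import random
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]


@dataclass
class Photon:
    position: Vec
    direction: Vec  # direction of travel when the photon arrived
    power: float    # scalar for brevity; RGB or spectral in a real renderer


@dataclass
class KdNode:
    photon: Photon
    axis: int
    left: Optional["KdNode"]
    right: Optional["KdNode"]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def build_kdtree(photons: list[Photon]) -> Optional[KdNode]:
    """Balanced kd-tree: median split along the axis with the largest extent."""
    if not photons:
        return None

    def extent(axis: int) -> float:
        coords = [p.position[axis] for p in photons]
        return max(coords) - min(coords)

    axis = max(range(3), key=extent)
    ordered = sorted(photons, key=lambda p: p.position[axis])
    mid = len(ordered) // 2
    return KdNode(ordered[mid], axis,
                  build_kdtree(ordered[:mid]), build_kdtree(ordered[mid + 1:]))


def nearest_photons(node, x: Vec, k: int, max_r2: float, heap=None) -> list:
    """Up to k nearest photons within sqrt(max_r2) of x.
    Max-heap via negated distances: entries are (-dist2, tiebreak, photon)."""
    if heap is None:
        heap = []
    if node is None:
        return heap
    d = x[node.axis] - node.photon.position[node.axis]
    near, far = (node.left, node.right) if d < 0 else (node.right, node.left)
    nearest_photons(near, x, k, max_r2, heap)
    dist2 = sum((a - b) ** 2 for a, b in zip(x, node.photon.position))
    if dist2 <= max_r2:
        heapq.heappush(heap, (-dist2, id(node), node.photon))
        if len(heap) > k:
            heapq.heappop(heap)  # drop the farthest photon
    # Once k photons are found, the search radius shrinks to the k-th distance.
    limit = -heap[0][0] if len(heap) == k else max_r2
    if d * d <= limit:
        nearest_photons(far, x, k, max_r2, heap)
    return heap


def radiance_estimate(tree, x: Vec, normal: Vec, albedo: float, k: int = 50,
                      max_radius: float = 0.1, disc_thickness: float = 0.01) -> float:
    """Diffuse radiance L ~ sum(f_r * power) / (pi * r^2) with f_r = albedo / pi."""
    heap = nearest_photons(tree, x, k, max_radius ** 2)
    if not heap or heap[0][0] == 0.0:
        return 0.0
    r2 = -heap[0][0]  # squared distance to the farthest photon found
    flux = 0.0
    for _, _, p in heap:
        offset = tuple(a - b for a, b in zip(p.position, x))
        if abs(dot(offset, normal)) > disc_thickness:
            continue  # disc filter: too far from the tangent plane
        if dot(p.direction, normal) >= 0.0:
            continue  # arrived from behind the surface
        flux += albedo / math.pi * p.power
    return flux / (math.pi * r2)


# Usage: 20,000 photons of total power 1 on the unit square z = 0, arriving from above,
# so the irradiance is 1 and the exact diffuse radiance is albedo / pi.
rng = random.Random(7)
n = 20_000
photons = [Photon((rng.random(), rng.random(), 0.0), (0.0, 0.0, -1.0), 1.0 / n)
           for _ in range(n)]
tree = build_kdtree(photons)
L = radiance_estimate(tree, (0.5, 0.5, 0.0), (0.0, 0.0, 1.0), albedo=0.8, k=200, max_radius=0.2)
```

The kd-tree turns each lookup from a scan over all photons into a search that visits only a small part of the tree; `k` and `max_radius` are exactly the kind of parameters that have to be tuned per scene.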

Problems

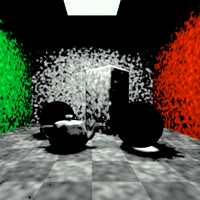

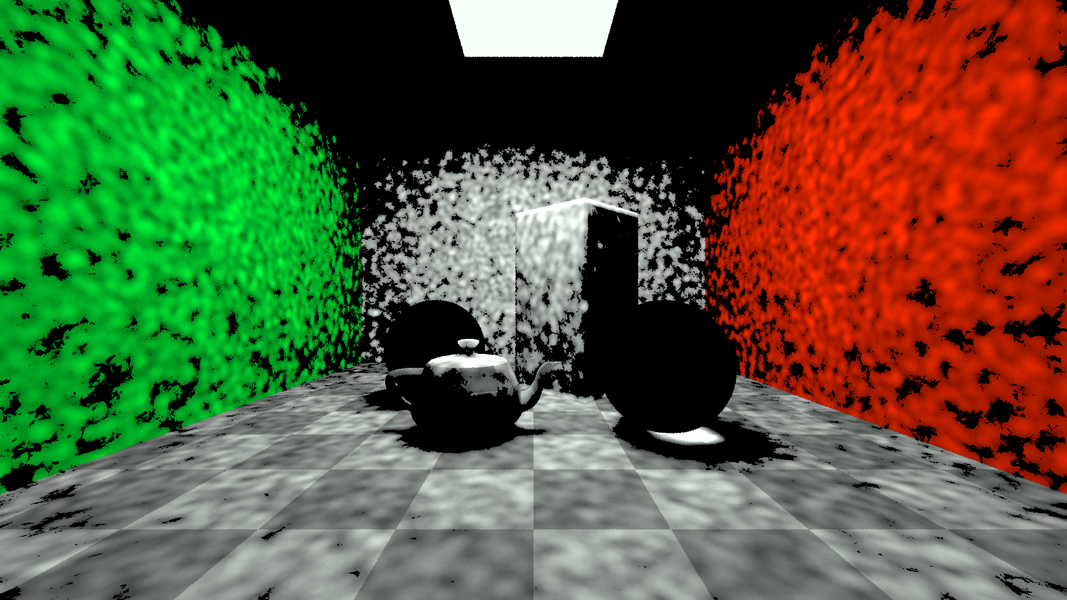

One of the biggest problems of Photon Mapping is the huge number of photons needed for a good estimate of indirect lighting. In addition, the parameters usually have to be tuned by trial and error. There is no general parameter set that works for every scene, short of setting the number of photons to nearly infinity. Too few photons produce blotchy artifacts, and too large a radius can include unwanted photons from other surfaces. Unfortunately, Photon Mapping is also a biased method: on average it does not produce the correct result, but it is consistent, i.e. it still converges to the correct result as the number of photons increases, as Jensen proved in his paper.

Now the number of photons has to be set higher!
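A small toy experiment makes the bias concrete. It is a hypothetical setup, not taken from PearRay: photons of total power 1 are spread uniformly over the lit half [0, 0.5] × [0, 1] of a unit square, so the exact irradiance everywhere on the lit half is 1 / 0.5 = 2. A fixed-radius density estimate (photon power inside a disc divided by the disc area) is evaluated at an interior point and at a point just inside the shadow edge, where about half of the disc covers the dark region.

```python
import math
import random


def scatter_photons(n: int, total_power: float, seed: int = 1):
    """Toy scene: n photons spread uniformly over the lit half [0, 0.5] x [0, 1]
    of the unit square; the half with x > 0.5 is in shadow."""
    rng = random.Random(seed)
    photons = [(0.5 * rng.random(), rng.random()) for _ in range(n)]
    return photons, total_power / n  # every photon carries the same power


def irradiance_estimate(photons, photon_power: float, x: float, y: float,
                        radius: float) -> float:
    """Fixed-radius density estimate: photon power inside a disc / disc area."""
    r2 = radius * radius
    count = sum(1 for px, py in photons if (px - x) ** 2 + (py - y) ** 2 <= r2)
    return count * photon_power / (math.pi * r2)


photons, photon_power = scatter_photons(100_000, total_power=1.0)
exact = 1.0 / 0.5  # irradiance on the lit half = total power / lit area

interior = irradiance_estimate(photons, photon_power, 0.25, 0.5, radius=0.05)
edge = irradiance_estimate(photons, photon_power, 0.499, 0.5, radius=0.05)
print(f"interior: {interior / exact:.2f} x exact, near shadow edge: {edge / exact:.2f} x exact")
```

The interior estimate comes out close to the exact value, while the estimate next to the shadow edge stays near half of it. More photons only reduce the noise, not this systematic error; it disappears only if the radius also shrinks as the photon count grows, which is the sense in which Photon Mapping is biased but consistent.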

About me

My name is Ömercan Yazici and I am a B.Sc. student of (Applied) Computer Science at the Ruprecht-Karls-University of Heidelberg in Germany. My main focus is on graphics and visualization algorithms, modern engine design, computer animation and numerical simulation.

More information about me is available on my personal web page: pearcoding.eu

or follow me directly on Twitter: @pearcoding