This page explains the meaning and usage of sensitivity in Wiiheadtrack. In general, sensitivity describes the ratio between the movement of the user's head and the resulting movement of the virtual camera. The higher the configured sensitivity, the more strongly the virtual camera moves relative to the user's head. There are two meanings of sensitivity: sensitivity in the narrower sense, which governs how horizontal and vertical head movement is translated, and distance, which governs how forward and backward movement is translated.

Both meanings are explained in the following.

This section covers sensitivity in the sense explained above. You can set the sensitivity by calling Wiiheadtrack::setSensitivity(float). The meaning of the value depends on the mode Wiiheadtrack is used in:

The sensitivity in the object-mode describes the maximum angle, the virtual camera moves on the imaginary sphere around the object in booth directions. For example, calling

wiiht.setSensitivity(90.0);

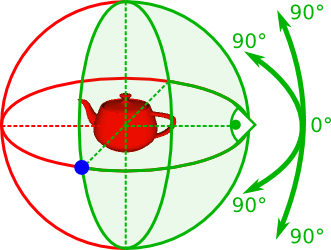

where wiiht is a Wiiheadtrack-object, allows moving on a hemisphere, 90 degree in each direction:

In this case, e. g. moving your head horizontal to the left border of the Wiimote-camera's imaginary screen (from the users point of view), positions the virtual camera to the blue dot in the image above.

Remark: Because of the way the position is computed, and because the user's head always moves slightly even when the user tries to hold still, it is almost impossible to hit the border of the imaginary screen exactly and therefore to reach the outermost angle. If you want to reach this angle, increase the sensitivity slightly.

Background: The angles $\varphi$ and $\theta$ of the virtual camera's spherical coordinates are computed by

![\[ \varphi = \frac{\texttt{sensitivity}}{180.0} \cdot \frac{\pi}{512} \cdot \texttt{eyeC[0]} \]](form_14.png)

and

![\[ \theta = \texttt{invY} \cdot \frac{\texttt{sensitivity}}{180.0} \cdot \frac{\pi}{384} \cdot \texttt{eyeC[1]}, \]](form_15.png)

where the factor Wiiheadtrack::invY indicates whether the y-axis should be inverted, 512/384 is the half width/height of the Wiimote-camera's imaginary screen, and Wiiheadtrack::eyeC ( ![$ \texttt{eyeC} \in [-512.0, 512.0] \times [-384.0, 384.0] \subset \mathbb{R}^2$](form_4.png) ) is the centre between the user's eyes, as seen by the Wiimote-camera.
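The two object-mode formulas can be evaluated as in the following minimal sketch. The names SphericalAngles and objectModeAngles are hypothetical and not part of Wiiheadtrack, and invY is assumed to be either +1 or -1, which this page does not state explicitly.

```cpp
#include <cmath>

// Hypothetical helper type, not part of Wiiheadtrack.
struct SphericalAngles {
    double phi;    // horizontal angle in radians
    double theta;  // vertical angle in radians
};

// Evaluates the object-mode formulas for phi and theta.
// sensitivity: maximum angle in degrees, as passed to setSensitivity()
// invY:        assumed to be +1.0 or -1.0 (assumption, see text)
// eyeX, eyeY:  eyeC[0] in [-512, 512] and eyeC[1] in [-384, 384]
SphericalAngles objectModeAngles(double sensitivity, double invY,
                                 double eyeX, double eyeY) {
    const double pi = std::acos(-1.0);
    const double scale = sensitivity / 180.0;
    return { scale * (pi / 512.0) * eyeX,
             invY * scale * (pi / 384.0) * eyeY };
}
```

With a sensitivity of 90.0 and the head at the horizontal border of the imaginary screen (eyeC[0] = 512), the sketch yields an angle of pi/2, that is 90 degrees, which matches the hemisphere described above.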

Sensitivity in the room-mode describes the maximum translation from the center of the virtual camera on the plane in x- and y-direction. For example, calling

wiiht.setSensitivity(5.0);

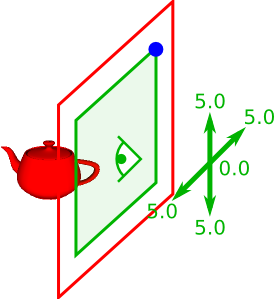

where wiiht is a Wiiheadtrack-object, allows moving on the following plane:

In this case, moving your head to the right upper corner of the Wiimote-camera's imaginary screen (from the users point of view), positions the virtual camera to the blue dot in the image above.

Remark: Because of the way the position is computed, and because the user's head always moves slightly even when the user tries to hold still, it is almost impossible to hit the border of the imaginary screen exactly and therefore to reach the outermost position. If you want to reach this position, increase the sensitivity slightly.

Background: The virtual coordinates are computed by

![\[ \texttt{pos[0]} = \frac{\texttt{eyeC[0]}}{512.0} \cdot \texttt{sensitivity} \]](form_18.png)

and

![\[ \texttt{pos[1]} = \texttt{invY} \cdot \frac{\texttt{eyeC[1]}}{384.0} \cdot \texttt{sensitivity}, \]](form_19.png)

where the factor Wiiheadtrack::invY indicates whether the y-axis should be inverted, 512/384 is the half width/height of the Wiimote-camera's imaginary screen, and Wiiheadtrack::eyeC is the centre between the user's eyes, as seen by the Wiimote-camera.

In Wiiheadtrack, the term distance describes how strongly forward and backward movement of the user moves the virtual camera.

Configurate the distance is a little bit more complicated, than configurate the sensitivity. The reason for this circumstance is, that the computed distance between the Wiimote-camera and the user's head depends on the distance between the two IR-LEDs. Therefore, you have to make some kind of calibration: Compile and run the program eye-distance.cpp in the folder eye-distance, using the command-line-command

make

or, if you already compiled it, and you want to run eye-distance.cpp again,

./eye-distance

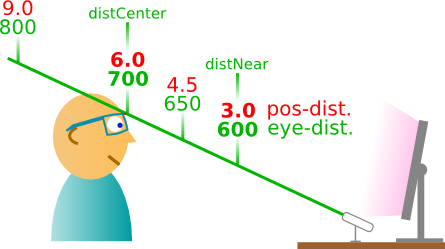

while you are in the folder eye-distance. The program helps you to detect the distance between the user and the Wiimote-camera, called eye-distance,

distCenter or eyeDist[0]), the user is sitting "relaxed" in front of the monitor and distNear or eyeDist[1]), the user wants to place himself towards the monitor, while using Wiiheadtrack.You just have to place yourself in the respective position and press the corresponding key ('c' for distCenter or 'n' for distNear).

In your program using Wiiheadtrack, the eye-distance is configured with Wiiheadtrack::setEyeDist(float, float). Assuming the measured values are distCenter = 700.0 and distNear = 600.0, you should call

wiiht.setEyeDist(700.0, 600.0);

in your program, which sets the corresponding member variables:

eyeDist[0] = 700.0; eyeDist[1] = 600.0;

Secondly, you have to configure the virtual equivalent of the positions determined in the previous section, called position-distance. The distance between the eyes and the Wiimote-camera corresponds to the radius of the imaginary sphere in object-mode and to the z-value of the virtual camera position in room-mode.

In both cases, you again have to specify two values: the value for the position in which the user sits "relaxed" in front of the monitor (distCenter or posDist[0]), and the value for the position closest to the monitor that the user wants to take while using Wiiheadtrack (distNear or posDist[1]).

In your program using Wiiheadtrack, the position-distance is configured with Wiiheadtrack::setPosDist(float, float). Assuming you are using object-mode and the desired values are distCenter = 6.0 and distNear = 3.0, you should call

wiiht.setPosDist(6.0, 3.0);

in your program, which sets the corresponding member variables:

posDist[0] = 6.0; posDist[1] = 3.0;

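After configuring the eye- and position-distance, Wiiheadtrack maps the current eye-distance to the position-distance with a linear function. Setting the values chosen above

wiiht.setEyeDist(700.0, 600.0);
wiiht.setPosDist(6.0, 3.0);

leads to the following result: an eye-distance of 700.0 is mapped to a position-distance of 6.0 and an eye-distance of 600.0 to a position-distance of 3.0, with values in between interpolated linearly.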

Background: The parameters ![$m = \texttt{posDistPar[0]}$](form_20.png) and ![$d = \texttt{posDistPar[1]}$](form_21.png) of the linear function $f(x) = m \cdot x + d$ are computed by

![\begin{eqnarray*} \texttt{posDistPar[0]} &=& \frac{\texttt{posDist[1]}-\texttt{posDist[0]}}{\texttt{eyeDist[1]}-\texttt{eyeDist[0]}} \\ \texttt{posDistPar[1]} &=& \texttt{posDist[0]} - \texttt{posDistPar[0]} \cdot \texttt{eyeDist[0]}. \end{eqnarray*}](form_22.png)

Then, the radius of the imaginary sphere (object-mode) or the z-value of the virtual camera position (room-mode) is computed from the current eye-distance eyeZ by

![\[ \texttt{posDistPar[0]} \cdot \texttt{eyeZ} + \texttt{posDistPar[1]}. \]](form_23.png)
