|

Kiretu

|

|

Kiretu

|

C++-class which represents a point-cloud of Microsoft's Kinect and allows the reconstruction of a point-cloud applying the steps explained at the documentation. More...

#include <KinectCloud.h>

Public Member Functions | |

| KinectCloud () | |

| Constructor of the KinectCloud-class. | |

| ~KinectCloud () | |

| Destructor of the KinectCloud-class. | |

| void | addZValues (int *zValues) |

| Adds the z-values of a Kinect depth-image grabbed by FrameGrabber and stores them as a point-cloud (std::vector< std::vector<float> >). | |

| void | addRgbValues (int *rgbValues) |

| Adds the RGB-values of a Kinect RGB-image grabbed by FrameGrabber and stores them as a point-cloud (std::vector< std::vector<float> >). | |

| void | rawToMeter () |

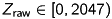

Converts the added depth-values of the Kinect (  ) into meter using the formula ) into meter using the formula

which was experimentaly determined (http://nicolas.burrus.name/index.php/Research/KinectCalibration). | |

| void | depthToCloud (std::vector< std::vector< float > > depthCam) |

Applies the intrinsic camera-parameters to the point-cloud. The parameters should be imported using YMLParser. With the help of these parameters, each point  of the depth-image with its depth-value of the depth-image with its depth-value  is projected on its equivalent point is projected on its equivalent point  in 3D-space regarding its z-value and the depth-camera-parameters. This is done with the following formula: in 3D-space regarding its z-value and the depth-camera-parameters. This is done with the following formula:

where | |

| void | applyExtrinsics (std::vector< std::vector< float > > rot, std::vector< float > trans) |

| Applies the extrinsic camera-parameters to the point-cloud. The parameters should be imported using YMLParser. This is done with a rotation and translation of each point:

where | |

| void | computeRgbMapping (std::vector< std::vector< float > > rgbCam) |

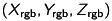

Computes the mapping between the point-cloud and the equivalent points of the RGB-image by reprojecting the point  of the point-cloud to its equivalent point of the point-cloud to its equivalent point  in the plane using the intrinsics of the RGB-camera imported by YMLParser. The projection is done with the following formula: in the plane using the intrinsics of the RGB-camera imported by YMLParser. The projection is done with the following formula:

| |

| std::vector< std::vector< float > > | getCloud () |

| Get the cloud. | |

| std::vector< std::vector< int > > | getCloudRgb () |

| Get the RGB-cloud. | |

| std::vector< std::vector< int > > | getCloudRgbMapping () |

| Get the cloud-RGB-mapping. | |

| std::vector< bool > | getValidValues () |

Get a vector which indicates if a point of the point-cloud is valid (  ). ). | |

| std::vector< bool > | getReconstructionSteps () |

| Get the reconstruction-steps. | |

Private Attributes | |

| std::vector< std::vector< float > > | cloud |

| std::vector< std::vector< int > > | cloudRgb |

| std::vector< std::vector< int > > | cloudRgbMapping |

| std::vector< bool > | validValues |

| std::vector< bool > | reconstructionSteps |

C++-class which represents a point-cloud of Microsoft's Kinect and allows the reconstruction of a point-cloud applying the steps explained at the documentation.

| KinectCloud::KinectCloud | ( | ) |

Constructor of the KinectCloud-class.

| KinectCloud::~KinectCloud | ( | ) |

Destructor of the KinectCloud-class.

| void KinectCloud::addRgbValues | ( | int * | rgbValues | ) |

Adds the RGB-values of a Kinect RGB-image grabbed by FrameGrabber and stores them as a point-cloud (std::vector< std::vector<float> >).

| rgbValues | Pointer to a  -dimensional array of RGB-values. -dimensional array of RGB-values. |

| void KinectCloud::addZValues | ( | int * | zValues | ) |

Adds the z-values of a Kinect depth-image grabbed by FrameGrabber and stores them as a point-cloud (std::vector< std::vector<float> >).

| zValues | Pointer to a  -dimensional array of z-values. -dimensional array of z-values. |

| void KinectCloud::applyExtrinsics | ( | std::vector< std::vector< float > > | rot, |

| std::vector< float > | trans | ||

| ) |

Applies the extrinsic camera-parameters to the point-cloud. The parameters should be imported using YMLParser. This is done with a rotation and translation of each point:

![\[ \begin{pmatrix} x_\mathrm{rgb} \\ y_\mathrm{rgb} \\ z_\mathrm{rgb} \end{pmatrix} = R \cdot \begin{pmatrix} x_\mathrm{d} \\ y_\mathrm{d} \\ z_\mathrm{d} \end{pmatrix} + T = \begin{pmatrix} r_{11} & r_{12} & r_{13} \\ r_{21} & r_{22} & r_{23} \\ r_{31} & r_{32} & r_{33} \end{pmatrix} \cdot \begin{pmatrix} x_\mathrm{d} \\ y_\mathrm{d} \\ z_\mathrm{d} \end{pmatrix} + \begin{pmatrix} t_1 \\ t_2 \\ t_3 \end{pmatrix}, \]](form_14.png)

where  and

and  are the extrinsic parameters of the Kinect and describe a rotation and translation.

are the extrinsic parameters of the Kinect and describe a rotation and translation.

Consider that the z-values should be converted into meters (rawToMeter()) before calling this function for a correct reconstruction.

| rot | Rotation-matrix (  ) of the Kinect as written in the specific yml-file and imported using YMLParser. ) of the Kinect as written in the specific yml-file and imported using YMLParser. |

| trans | Translation-vector (3-dimensional) of the Kinect as written in the specific yml-file and imported using YMLParser. |

| void KinectCloud::computeRgbMapping | ( | std::vector< std::vector< float > > | rgbCam | ) |

Computes the mapping between the point-cloud and the equivalent points of the RGB-image by reprojecting the point  of the point-cloud to its equivalent point

of the point-cloud to its equivalent point  in the plane using the intrinsics of the RGB-camera imported by YMLParser. The projection is done with the following formula:

in the plane using the intrinsics of the RGB-camera imported by YMLParser. The projection is done with the following formula:

where  contains the intrinsic parameters of the RGB-camera.

contains the intrinsic parameters of the RGB-camera.

Consider that the depth-image needs to be projected into the 3D-space using (depthToCloud(std::vector< std::vector<float> > depthCam)) before calling this function for correct reconstruction.

| rgbCam | Intrisic parameters of the RGB-cam as a  -matrix as written in the specific yml-file and imported using YMLParser. -matrix as written in the specific yml-file and imported using YMLParser. |

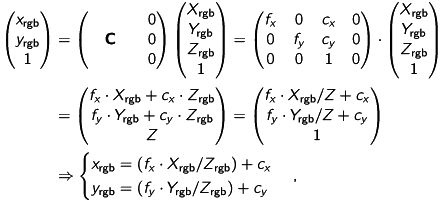

| void KinectCloud::depthToCloud | ( | std::vector< std::vector< float > > | depthCam | ) |

Applies the intrinsic camera-parameters to the point-cloud. The parameters should be imported using YMLParser. With the help of these parameters, each point  of the depth-image with its depth-value

of the depth-image with its depth-value  is projected on its equivalent point

is projected on its equivalent point  in 3D-space regarding its z-value and the depth-camera-parameters. This is done with the following formula:

in 3D-space regarding its z-value and the depth-camera-parameters. This is done with the following formula:

where  contains the intrinsic parameters of the depth-camera. Notice that the z-value doesn't change during this procedure and is already available at the depth-image.

contains the intrinsic parameters of the depth-camera. Notice that the z-value doesn't change during this procedure and is already available at the depth-image.

Consider that the extrinsic parameters should already be applied to the point-cloud (applyExtrinsics(std::vector< std::vector<float> > rot, std::vector<float> trans)) before calling this function.

| depthCam | Intrisic parameters of the depth-cam as a  -matrix as written in the specific yml-file and imported using YMLParser. -matrix as written in the specific yml-file and imported using YMLParser. |

| std::vector< std::vector< float > > KinectCloud::getCloud | ( | ) |

Get the cloud.

| std::vector< std::vector< int > > KinectCloud::getCloudRgb | ( | ) |

Get the RGB-cloud.

| std::vector< std::vector< int > > KinectCloud::getCloudRgbMapping | ( | ) |

Get the cloud-RGB-mapping.

| std::vector< bool > KinectCloud::getReconstructionSteps | ( | ) |

Get the reconstruction-steps.

recon indicate: recon[0]: Raw depth-values of the Kinect converted into meters using rawToMeter(). recon[1]: The intrinsic parameters of the depth-camera were applied using depthToCloud(std::vector< std::vector<float> > depthCam). recon[2]: The extrinsic camera-parameters were applied using applyExtrinsics(std::vector< std::vector<float> > rot, std::vector<float> trans). recon[3]: The intrinsic parameters of the RGB-camera were applied using computeRgbMapping(std::vector< std::vector<float> > rgbCam). recon[4]: Normals were reconstructed using computeNormals() – not implemented yet.) | std::vector< bool > KinectCloud::getValidValues | ( | ) |

Get a vector which indicates if a point of the point-cloud is valid (  ).

).

points if it is valid (

points if it is valid (  ).

). | void KinectCloud::rawToMeter | ( | ) |

Converts the added depth-values of the Kinect (  ) into meter using the formula

) into meter using the formula

![\[ Z_\mathrm{meter} = \frac{1}{Z_\mathrm{raw} \cdot (-0.0030711016) + 3.3309495161}, \]](form_7.png)

which was experimentaly determined (http://nicolas.burrus.name/index.php/Research/KinectCalibration).

Consider that the z-values must be added (addZValues(int* zValues)) before before calling this function.

std::vector< std::vector<float> > KinectCloud::cloud [private] |

The point-cloud

std::vector< std::vector<int> > KinectCloud::cloudRgb [private] |

The RGB-values

std::vector< std::vector<int> > KinectCloud::cloudRgbMapping [private] |

Coordinates of a point's corresponding color at the RGB-image

std::vector<bool> KinectCloud::reconstructionSteps [private] |

Reconstruction flags

std::vector<bool> KinectCloud::validValues [private] |

Valid flags for each point